A just-published study indicates that runners who increase their stride rate could substantially improve their running efficiency and performance. In the University of New Hampshire trial, runners lowered their submax oxygen consumption (that’s a good thing) by 11 percent when they upped their stride rates from about 170/min to 180/min.

A lower submax oxygen consumption should lead to faster endurance race times. However, actual improvement in performance wasn’t tested in the New Hampshire study, due to lack of funding and additional demands on the runner-subjects, acknowledges senior author Tim Quinn, professor of kinesiology.

Runners have long been interested in stride rates for three solid reasons. First, stride rate (frequency) is something you can rather easily change, unlike height, muscle fiber, cardiac dimensions, and so on.

Second, a change in stride rate can lower your risk of injury. Third, it may improve efficiency and performance. That’s pretty much a winning trifecta.

But it leaves a big, unanswered question: What’s your perfect stride rate?

Search for the Ideal Cadence

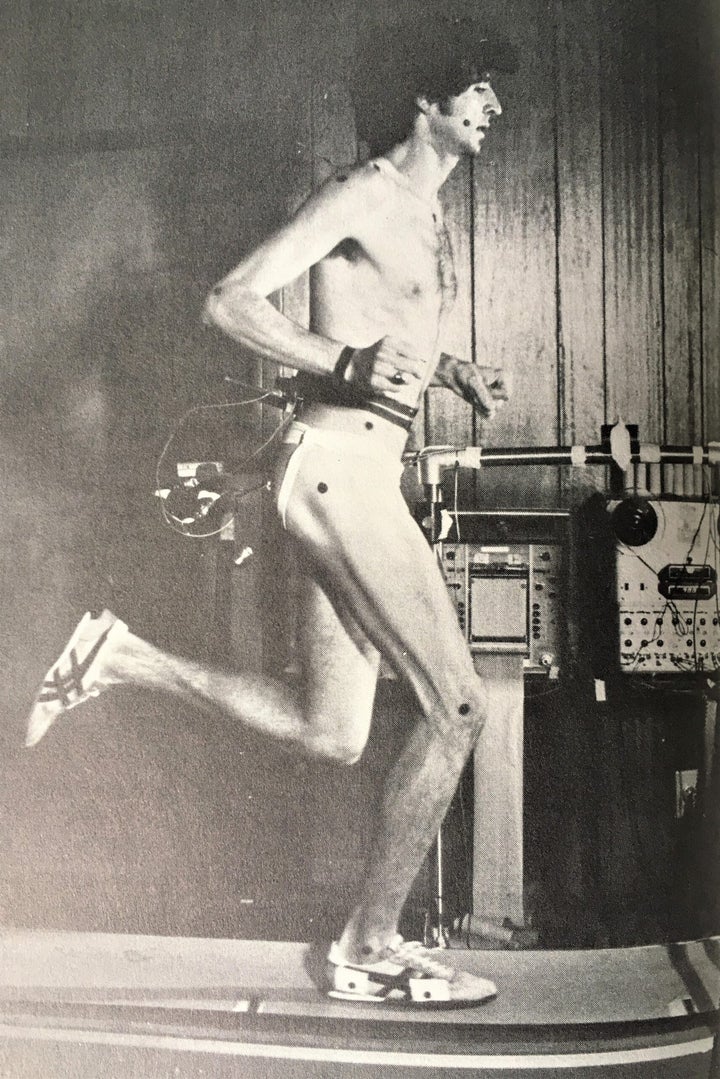

The history of stride-rate studies has had two major inflection points, each associated with a research giant: biomechanist Peter Cavanagh and exercise physiologist Jack Daniels. In the late 1970s and early 1980s, Cavanagh conducted lab tests to investigate the relationship of stride rate to oxygen consumption. He found that many top runners had a submax stride rate around 175/minute, that they tended to settle naturally into this rate after several years of regular running, and that both lower and higher stride rates were less efficient.

Interestingly, Cavanagh’s 1982 definition of a “recreational runner” was one with an oxygen consumption sufficient to run 16:00 for 5K and 2:35 in the marathon. He also measured “good” and “elite” runners in his Penn State lab. The latter included the likes of Bill Rodgers and Frank Shorter, who, in one study, ran at 5:24/mile pace with an average stride rate of 191/min versus 180 for “good” runners.

Rodgers remembers his visit to Cavanagh’s lab, a week after his 1978 New York City Marathon win, for what he learned about his famously flopping-and-snapping right arm. “Cavanagh told me I had a shorter left leg, so the right arm swung out to balance the opposite leg,” Rodgers says.

He doesn’t remember his measured stride rate, but notes: “We did some pretty weird stuff on the treadmill with really bright lights shining in our eyes.” The lights might have been positioned by Cavanagh’s staff or by the PBS NOVA TV crew that filmed Rodgers’s visit to the lab.

In 1984, Jack Daniels and his wife, Nancy, spent 10 days seated at the middle of the homestretch at the LA Olympics without seeing much of the track action. They were too busy counting. One would count off 20 or 30 seconds on a stopwatch while the other focused on the stride rate of a particular runner.

In this low-tech manner, they determined average stride rates for every distance from the 800 meters to the marathon. They found that the 800 stood apart; it demanded a stride rate of 200+. From the 1500 to the marathon, surprisingly, rates differed little. All were near or just above 180.
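The Danielses’ method reduces to one line of arithmetic: the number of strides counted during a timed window, scaled up to a full minute. A minimal Python sketch; the function name and the sample counts are hypothetical illustrations, not figures from their 1984 observations:

```python
def strides_per_minute(stride_count: int, window_seconds: float) -> float:
    """Scale a stride count taken over a timed window to a per-minute rate."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    return stride_count * 60.0 / window_seconds


# Hypothetical counts for a 30-second and a 20-second window
print(strides_per_minute(91, 30))  # 182.0
print(strides_per_minute(60, 20))  # 180.0
```

A 20-second window needs only a 3x multiplier and a 30-second window a 2x one, which is part of why a stopwatch and a tally were enough.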

They gave particular attention to the all-important last-lap sprint, wondering what top runners did to accelerate so dramatically. All but one achieved this by increasing both stride rate and stride length. The exception: Maricica Puica, winner of the women’s 3000-meter final (the Mary Decker vs Zola Budd affair). Puica sprinted the last 200 in 31 seconds (compared to about 35 seconds/200 up to then) without any increase in stride rate. She did it all with muscle (longer strides).
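Running speed is stride rate multiplied by stride length, so if Puica’s rate truly stayed constant, her entire speedup had to come from longer strides. The Python sketch below (function name hypothetical) assumes an exactly unchanged stride rate and uses the article’s 200-meter splits of roughly 35 and 31 seconds:

```python
def stride_length_change(old_split_s: float, new_split_s: float) -> float:
    """Fractional increase in stride length needed to cut a split over a
    fixed distance from old_split_s to new_split_s at an unchanged stride rate.

    speed = stride_rate * stride_length, and speed over a fixed distance is
    inversely proportional to split time. With stride_rate held fixed,
    stride length must therefore scale by old_split_s / new_split_s.
    """
    return old_split_s / new_split_s - 1.0


change = stride_length_change(35.0, 31.0)
print(f"{change:.1%}")  # 12.9%
```

Under those assumptions, the drop from 35 to 31 seconds implies strides about 13 percent longer.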

Testing the Lore

Daniels never published his stride-rate findings in a research journal, but he discussed them in his popular book Daniels’ Running Formula and in many talks to coaches. Before long, the 180 strides/minute dictum had insinuated itself into training and racing lore. Yet no one had tested it in the controlled manner Quinn adopted.

He knew the full history of running-stride research, and also that few coaches tried to alter their veteran runners’ strides. The coaches assumed these runners had probably settled naturally into their optimal stride. Quinn’s alternative theory: since typical runners differ from Cavanagh’s superfit subjects or Daniels’s Olympians, they might not have found their best stride rate.

To test this hypothesis, he assembled 22 experienced college-aged women runners (average 5K time of about 19:50), and divided them into two groups. Both groups began with an average stride rate of about 170.

In the experiment, one group continued training as usual for 10 days. The other received a daily 15-minute treadmill session with a metronome set to 180 beats/minute. They were instructed to adjust their stride rates upward to match the metronome.

They were also told to maintain this cadence in their outdoor training runs after the treadmill session. A training-log analysis showed that both groups did about the same kinds and amounts of training through the 10-day period.

Before and after the training period, subjects had their oxygen consumption and heart rate measured in a submax treadmill test at 7:03/mile pace. Compared with the as-usual group, members of the 180 group lowered their oxygen consumption by an average of 11 percent and their heart rate by an average of 5 percent.

While Quinn measured only women, there’s no reason to think the results would be different for men. “The training worked well, and the runners adapted to the increased rate pretty quickly,” he adds. “Almost all of them said they felt more comfortable at the higher rate. We’re not saying that 180 is a ‘magic number’ but it seems reasonable to strive to get close to that.”

Faster is Relative

Quinn and his team didn’t investigate injury prevention. But the University of Wisconsin’s Bryan Heiderscheit, an expert in that area, thinks the shorter strides that accompany a higher stride rate would likely lower ground reaction forces. “That could have a positive effect on injury risk,” he says.

Heiderscheit also notes that a jump to 180 might be excessive for runners slower than Quinn’s subjects. They could race 5K in under 20 minutes and had a submax stride rate of about 170/min. Since stride rate depends on speed, slower racers (say, 27 minutes for 5K) would likely have a stride rate around 160. These runners might do well by increasing to 170, while 180 might be too high and cause detrimental changes in their stride.

Besides risking injury, Heiderscheit says, “If you make too big of a change, you’ll notice a dramatic increase in your oxygen cost—it’s going to make running pretty miserable for you. So you want to keep that change no more than 5–10% at a time, just to let your body adapt to it.”
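Heiderscheit’s 5–10% guideline is easy to turn into numbers. The Python sketch below is only an illustration: the function names are hypothetical, and the 5 and 10 percent bounds come straight from his quote. It computes the target range for one adjustment step and checks the article’s examples.

```python
def next_cadence_range(current_spm: float, low_pct: float = 5.0,
                       high_pct: float = 10.0):
    """Return (low, high) cadence for one step that raises the current
    cadence by low_pct to high_pct percent."""
    return (current_spm * (1 + low_pct / 100),
            current_spm * (1 + high_pct / 100))


def percent_change(current_spm: float, target_spm: float) -> float:
    """Percent increase from current_spm to target_spm."""
    return (target_spm - current_spm) / current_spm * 100


lo, hi = next_cadence_range(160)
print(f"From 160: aim for about {lo:.0f}-{hi:.0f} per minute")
print(f"160 -> 170: {percent_change(160, 170):.2f}%")  # within 5-10%
print(f"170 -> 180: {percent_change(170, 180):.1f}%")  # within 5-10%
print(f"160 -> 180: {percent_change(160, 180):.1f}%")  # beyond 10%
```

For a runner at 160, one step lands at roughly 168 to 176. The move from 160 to 170 is a 6.25% jump and the move from 170 to 180 about 5.9%, both inside the guideline, while going straight from 160 to 180 is 12.5%, more than he recommends at once.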

Better than Super Shoes

It’s the unanswered question about performance improvement that many runners will find most enticing in the new study. Remember: some Nike shoes are promising a 4 to 5 percent reduction in oxygen consumption. The Quinn study produced more than double that: an 11 percent reduction.

No one is claiming faster strides will reduce your times by 11 percent, however. Most experts believe the improvement in race time is about 60 to 70 percent of the percent reduction in oxygen consumption. Taking the low end, 0.6 × 11 = a 6.6 percent improvement in race times.
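The rule of thumb above can be applied to a specific race time. The Python sketch below is illustrative only: the 0.6 to 0.7 transfer factor is the experts’ rough estimate quoted here, the 19:50 input is the approximate average 5K of Quinn’s subjects, the function names are hypothetical, and the study itself never measured race performance.

```python
def projected_race_time(race_time_s: float, o2_reduction_pct: float,
                        transfer: float) -> float:
    """Rule-of-thumb projection: race time improves by `transfer` times
    the percent drop in submax oxygen consumption."""
    improvement_pct = transfer * o2_reduction_pct
    return race_time_s * (1 - improvement_pct / 100)


def mmss(seconds: float) -> str:
    """Format seconds as m:ss, rounded to the nearest second."""
    m, s = divmod(round(seconds), 60)
    return f"{m}:{s:02d}"


five_k = 19 * 60 + 50  # about the average 5K time of Quinn's subjects
for transfer in (0.6, 0.7):
    t = projected_race_time(five_k, 11, transfer)
    print(f"transfer {transfer}: {transfer * 11:.1f}% faster -> {mmss(t)}")
```

For that 19:50 runner, the projection works out to roughly 18:18 to 18:31: a hypothetical figure from the rule of thumb, not a measured result.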

Which is huge: big enough to make it worth seeing whether you can get your stride rate up to 180, or at least substantially higher than it is now.

When it Rains…

Another brand-new stride-rate study is the first to take runners out of the lab and into the real-world environment where we actually run. This research team, from the Netherlands, found that most of its subjects reached their most efficient stride frequency, as measured by heart rate, at roughly six strides per minute above their normal rate. However, the efficiency difference between normal and optimal stride rate was so low that the researchers concluded: “There seemed little to be gained by increasing stride frequency.”

Still, for runners looking to maximize their efforts, improvement is improvement. In sum, these new studies indicate that an increase in your normal cadence—taking quicker steps and spending less time on the ground—can likely make you more efficient, and thus faster.